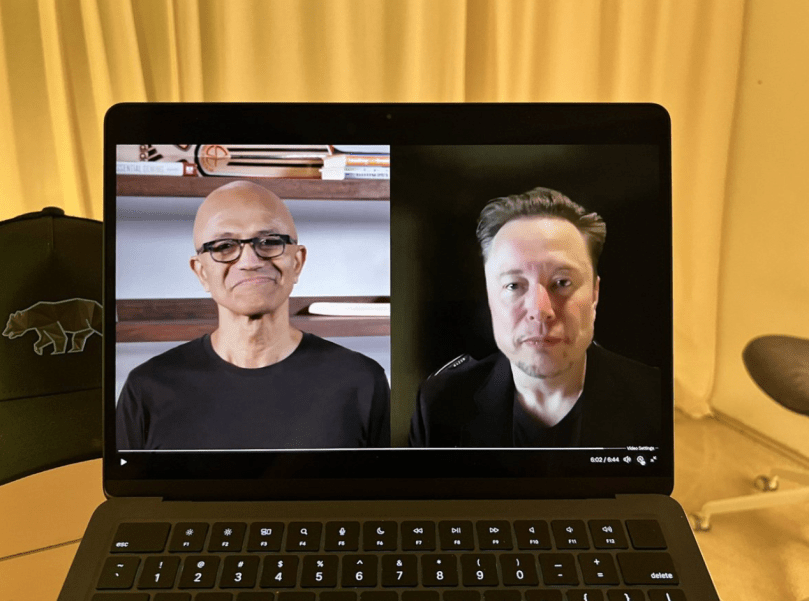

Satya Nadella: Thank you so much, Elon, for being here at BUILD. I know you started off as an intern at Microsoft. You were a Windows developer, and of course you're still a big PC gamer. Uh, you wanna just talk about your early days with Windows and the kinds of things you built?

Elon Musk: Yeah, well, actually I started before Windows with DOS. I had one of the early IBM PCs with MS-DOS.

I think I had, like, 128K in the beginning and then it doubled to 256K, which felt like a lot. So, yeah, I programmed video games in DOS and then later in Windows. Remember Windows 3.1?

Satya Nadella: Yeah! No, it's wonderful. I mean, even the last time I chatted with you, you were talking about everything, even the intricacies of Active Directory, and so it's fantastic to have you at our Developer Conference. Obviously, the exciting thing for us is to be able to launch Grok on Azure.

I know you have a deep vision for what AI needs to be, and that's what got you to build this. It's a family of models that includes both responsive and reasoning models, and you have a very exciting roadmap. You wanna just tell us a little bit about your vision and the capability? You are pushing on both capability and efficiency, so maybe you can talk a little bit about that.

Elon: Sure! So yeah, with Grok, especially with Grok 3.5, which is about to be released, it's trying to reason from first principles, so apply the tools of physics to thinking. If you're trying to get to fundamental truth, you boil things down to the axiomatic elements that are most likely to be correct, and then you reason up from there. Then you can test those conclusions against those axiomatic elements and inherent physics. If you violate conservation of energy or momentum, then either you get a Nobel Prize or you're wrong. And you're almost certainly wrong, basically. So that is really the focus of Grok 3.5: the fundamentals of physics, and applying physics tools across all lines of reasoning. And to aspire to truth with minimal error. There are always gonna be some mistakes made, but aim to get to truth, with acknowledged error, and minimize that error over time. I think that's actually extremely important for AI safety.

So I've thought a lot, for a long time, about AI safety, and the conclusion is the old maxim that honesty is the best policy. It really is, for safety. But I do want to emphasize that we have made and will make mistakes, but we aspire to correct them very quickly. We are very much looking forward to feedback from the developer community, to hear, like, what do you need, where are we wrong, how can we make it better, and to have Grok be something that the developer community is very excited to use, where they can feel that their feedback is being heard and Grok is improving and serving their needs.

Satya Nadella: Yeah, I know. In some sense, you know, cracking the physics of intelligence is perhaps the real goal for us to be able to use AI at scale. And so it's so good to see the first-principles approach that you and your team are taking. And you're also deploying this. I mean, one of the things about what you do is, uh, you're doing unsupervised FSD on one side, you're doing robotics, and of course there's Grok, so you're deploying Grok across all of your businesses, from SpaceX to Tesla, and obviously at X. One of the themes for this Developer Conference, Elon, is that we're building pretty sophisticated AI apps, right? It's not even about any one model; it's about orchestrating multiple models and multiple agents. Uh, is there anything you are seeing on the real-world application side, even inside your own companies, when you think about a Tesla or SpaceX, where you put Grok and these other AI models you're building?

Elon: Yeah, it's incredibly important for an AI model to be grounded in reality. Reality, you know, like physics, is the law, and everything else is a recommendation. I'm not suggesting people break the laws made by humans; you know, we should generally obey human laws. But I've seen many people break human-made laws, and I have not seen anyone break the laws of physics.

So for any given AI, grounding it against reality matters. As you mentioned with the car, it needs to drive safely and correctly, and the humanoid robot, Optimus, needs to perform the task that it's being asked to perform. These things are very helpful for ensuring that the model is truthful and accurate. It has to adhere to the laws of physics.

So I think something that's somewhat overlooked, or at least not talked about enough, is that to really be intelligent, it's got to make predictions that are in line with reality, or in other words, physics. That is a really fundamental thing, and being able to ground that with cars and robots is very important. We are also seeing Grok be very helpful in things like customer service; you know, the AI is infinitely patient and friendly, and you can yell at it and it's still gonna be very nice.

I think in terms of improving the quality of customer service and issue resolution, Grok is already doing quite a good job at SpaceX and Tesla, and we look forward to offering that to other companies.

Satya Nadella: That's fantastic. I'm really thrilled to get this journey started, get that developer feedback, and then see how developers deploy it. There are these language models, and I think over time we will see language models come together with vision and with action, but, to your point, being really grounded in a real-world model is ultimately the goal here. So thank you so much, Elon, for briefly joining us today. We're really excited about working with you and getting this into developers' hands.

Elon: Thank you very much, and I can't emphasize enough that we're looking for feedback from you, the developers in the audience. Tell us what you want and we'll make it happen. Thank you.

Transcript by Gail Alfar.